By Gennady Krizhevsky

Version 1.0.8

Table of Contents

| ODAL Persistence Framework |

| Summary |

| Preface |

| Motivation |

| Some thoughts on intrusiveness |

| ODAL approach |

| First ODAL Application |

| How To Write Maintainable Applications |

| Basic Ideas |

| High Level Architecture |

| Quick Guide To ODAL |

| ODAL Configuration And Descriptors Format |

| What Is SDL? |

| Install ODAL |

| Generate Basic Persistent Object Descriptors |

| Generate Basic Persistent Objects |

| Create Composite Persistent Objects Descriptor (Optional) |

| Generate Composite Persistent Objects (Optional) |

| Generate Domain Objects and Mapper (Optional) |

| Create DAO Classes (Model 1) |

| Create DAO Classes (Model 2) |

| Instantiate Persistency |

| Put It All Together |

| Using Stand-Alone DataSource |

| Advanced Features Guide |

| Persistent Objects |

| Composite Persistent Objects |

| Enabling Compound Features |

| Enabling Complex Features. Object Trees |

| Many-To-Many Relationships |

| Circular And Bi-Directional Dependencies |

| Combining Compound And Complex Features |

| Other Types Of Persistent Objects |

| Object Serialization |

| Transactions |

| Transaction Listeners |

| Joining Transaction Managers |

| JTA Transactions |

| Primitive Transaction Manager |

| Working With Persistency |

| Retrieving Persistent Objects |

| Loading Persistent Object |

| Query-By-Example |

| Queries |

| Enhancing Query-By-Example |

| Query With Joins |

| Query Returning Data From Multiple Tables |

| Sub-Queries |

| Union Queries |

| Lazy Object Loading |

| Transforming Query Results While Retrieving |

| Query Trees |

| Query Returning Single Value |

| Count And Exists Queries |

| Paginated Queries |

| Query Serialization |

| Query Context |

| Inserting Persistent Objects |

| Pipe Insert |

| Updating Basic Persistent Objects |

| Updating Composite Persistent Objects With Compound Features |

| Updating Composite Persistent Objects With Complex Features |

| More Complex Updates |

| Deleting Persistent Objects |

| Delete-By-Example |

| Modify Queries |

| Pessimistic Locks |

| Optimistic Locks |

| Batch Modify Mode |

| Raw Query Approach |

| Ad-Hoc Modify Statements |

| Pipes |

| Understanding ODAL Data Types |

| Creating Custom Type Handler |

| Creating Custom Column Types |

| Binary Types |

| Key Generators |

| Rule API |

| Generating Persistent Objects From Ad-Hoc Queries |

| Executing Stored Procedures |

| Generating Persistent Objects From Calls |

| Object Caching |

| Persistency Cache Factory |

| Multi-index Cache Factory |

| Cache Integration With ODAL |

| Caching Query Results |

| Query Retrieving Sparsely Populated Objects |

| Oracle SQL Loader Persistency |

| Enabling Logging |

| Using Mapping Persistency (Model 2 Approach) |

| Select Queries With Model 2 |

| Insert And Delete |

| Update |

| Ad-hoc Queries |

| Mapper |

| Code Generating Tools |

| SQL Command Line Tool |

| Examples |

| Integration |

| Terracotta Cache Clustering |

| Spring Framework |

ODAL stands for Objective Database Abstraction Layer. It is a framework to communicate with a persistent storage. It is simple and open to developer since we strongly believe that developer is an intelligent link when it comes to building business applications. ODAL heavily relies on usage of interfaces to shield the developers from the complexities of the framework and to allow for development of plug-ins. ODAL is an ORM framework since it can map relational database tables to application objects. ODAL also supports features usually associated with ORM frameworks such as “lazy” instantiation, ability to retrieve object graphs and to properly express “table inheritance” in terms of Java classes.

Major priorities while developing ODAL were set to maintainability, simplicity, flexibility and performance. Exactly in that order.

Rephrasing Mark Twain, one can say: writing a database access layer is easy, that's why we have so many of them. In reality, it reflects complexity of the problem and the fact that there is no solution that ultimately closes it.

What is different about ODAL from the majority of the similar frameworks on the market? Several things.

As we already said, it focuses on maintainability since we strongly believe in the applications afterlife. It is developed with understanding that modern application is a complex beast and there is a need for business-like transactions as well as batch procedures, report-like ad-hoc queries and so on. It does not use any type of metadata descriptors at runtime. It has minimal dependencies on other libraries. In fact, all the dependencies at runtime are optional except for the JDBC drivers. It does not use Java reflection API. It has minimal overhead as compared to regular JDBC when executing database calls (do not trust those results showing ORM code executing faster than JDBC – they are either using cached ORM objects or non-prepared JDBC statements). It is not “too smart”, meaning it does what it says. For example, if you say “give me object from the database”, it will go to the database to get the object, caching, even though integrated, is done externally, dynamically controllable at runtime. And finally, it comes with the methodology which will be explained later.

To efficiently work with ODAL you have to use the generated objects and extend them if necessary. ODAL generated persistent objects are Java Beans but rather smart ones. They also contain minimum utility methods and they do not save or retrieve themselves from the database.

Generated objects remember their initial state. So you can, for example, select them in one thread and update in another without reloading them from the database if you deem it appropriate.

When dealing with real life business application, J2EE or not, it is commonly accepted that we have to deal with Domain Objects. Domain Objects represent your business or Domain Model. When communicating with external systems or components that know nothing about your Domain you may need another set of objects – so called Data Transfer Objects. Depending on structure of the database that may or may not reflect you Domain Model, you may also need the 3rd set of objects – Persistent Objects, the ones that can be persisted using some persistence framework. For some applications all 3 sets may coincide. For more complicated ones you may need all 3 of them. Interesting that in ORM world the 3rd set of objects is usually omitted since it is assumed that you can map the Domain Objects directly to the database. In reality, sometimes it is true, sometimes it is not. Occasionally, you will find that your database was designed to keep generic kind of objects and has very little to do with the Domain Model.

ODAL is build with this simple fact in mind and is concentrated on the Persistent Objects that, as we said, may or may not coincide with the Domain Model. Of course, we assumed that we do not use plain JDBC since it is still much easier to write converters between Java objects than to clutter application with plain JDBC code.

Nowadays great popularity gained so-called POJO based approach to designing persistence frameworks. POJOs are Plain Old Java Objects – the ones that do not inherit to any specific class or implement any framework dependent interface. So you define your Domain Model objects, map them to database tables and persist them. This is called a non-intrusive approach. It is quite nice and nobody in sound mind would argue with that. However, when it comes to implementing this approach, let us say, in Java, it is usually done through byte code manipulation, tons of XML or the latest invention – annotation mappings that are kept right in the Domain Objects (DOs).

In our mind byte code modification (or runtime code generation, for that matter, meaning usually that your objects get replaced by some other ones), and annotations kept in DOs are not really compatible with the non-intrusiveness or POJO, for that matter, paradigm. Byte code manipulation also complicates debugging since your source code does match the compiled one.

It is worth to mention that byte code manipulation became so fashionable that probably every 9 of 10 new open source Java projects are based on it. The danger is that if you combine couple of such frameworks in the same project you may end up with all kinds of mysterious problems that will be hard to discover.

With ODAL we always build our Persistent Objects (POs) first – they all inherit to a common parent. After that if the database reflects our domain model, we have 2 alternatives – either to derive the DOs from the Persistent ones or to build a separate set of DOs that in this case can be POJOs. If the database does not reflect our business we cannot derive the DOs from the Persistent Objects and we have to build them separately.

We generate our Basic Persistent Objects (the ones that map to the database tables) out of the database, create composite objects (see below) out of the first ones having all the structures predefined before the application run time. That allows ODAL to perform all the persistent operations without any byte code modification or even use of the reflection API (at least when dealing with POs). This approach brings more control, “hard ground”, if you will, to the application – nothing is created out of “thin air”. You can always review code before it gets executed, and many problems can be discovered during compilation. One more thing to consider that even though reflection does not impose a severe penalty anymore, as it was with earlier versions of Java, for some applications the overhead can be considerable. Later on, we will show example persistence that saves objects to sqlLoader files instead of the database. In which case, otherwise small contributors to CPU time become conspicuous.

Any framework endorses certain things and restricts others. This is a positive thing when the methodology is right. ODAL as a framework is not an exception. For example, ODAL consistently prefers Java code to XML, other descriptors or the annotations, for that matter, at runtime. At runtime everything that can be defined or precompiled – should be. It is some kind of strict typing, if you like. We believe that this approach delivers more maintainable code. This includes, by the way, SQL queries. If you want to create an SQL statement, you should not do it as just a string. Why? Because if you change the database even a little, your queries will start failing at runtime. The endorsed approach is to use generated column constants together with Query API, described later. In that case, if you change the database and re-run your code generating scripts the worst thing that can happen - the application will not compile.

Pleasant consequence of ODAL's “precompile-what-you-can” approach is very short startup time. It may not seem extremely important but when you will have to run hundreds of functional tests all of which initializing persistence framework you will learn to appreciate that.

If the POs closely match the Domain Model we can either inherit our DOs from the corresponding POs (see Model 1 approach) or to build a separate Domain Object set. If we choose the latter, and the database reflects our Domain Model, we generate a separate set DOs (POJO beans) the the default mapper between POs (see Model 2 approach).

Martin Fowler in his book “Patterns of Enterprise Application Architecture” mentions several database abstraction layer design patterns that are appropriate depending on size of the application. The problem in real world that the application may start as a small or medium one and over time grow into a very large. Usually, this switch would require a big refactoring including the persistence layer replacement. ODAL allows you to shift from “Transaction Script” approach to “Active Record” to “ORM” like one smoothly.

Words are words but as they say: “Show me the money!”.

Here you are. The 1st application with ODAL. Not really a “HelloWorld” but - “HelloOdal”:

package com.completex.objective.persistency.examples.ex001;

import com.completex.objective.components.log.Log;

import com.completex.objective.components.persistency.PersistentObject;

import com.completex.objective.components.persistency.core.adapter.DefaultPersistencyAdapter;

import com.completex.objective.components.persistency.transact.Transaction;

import java.io.FileInputStream;

import java.io.IOException;

import java.sql.SQLException;

import java.util.Properties;

public class HelloOdal {

public static String configPath = "examples/src/config/hsql.properties";

public static void main(String[] args) throws IOException, SQLException {

if (args.length > 0) {

configPath = args[0];

}

Properties properties = new Properties();

properties.load(new FileInputStream(configPath));

DefaultPersistencyAdapter persistency = new DefaultPersistencyAdapter(properties);

Transaction transaction = persistency.getTransactionManager().begin();

persistency.executeUpdate("CREATE TABLE EX_HELLO_TABLE (NAME VARCHAR(30))");

persistency.executeUpdate("INSERT INTO EX_HELLO_TABLE VALUES ('Hello ODAL!')");

PersistentObject po = (PersistentObject) persistency.

selectFirst(persistency.getQueryFactory().newQuery("SELECT * FROM EX_HELLO_TABLE"));

printResult(po);

persistency.executeUpdate("DROP TABLE EX_HELLO_TABLE");

persistency.getTransactionManager().commit(transaction);

}

private static void printResult(PersistentObject po) {

System.out.println(" Congratulations: your 1st data retrieved from the database: by index: "

+ po.record().getString(0));

System.out.println(" Congratulations: your 1st data retrieved from the database: by name : "

+ po.record().getString("NAME"));

}

}

What did we just do? We created a table, inserted some data into it and retrieved it into generic PersistentObject. As you can probably guess from the code, PersistentObject contains a Record from which the values can be accessed either by index or by name.

This is an example of the “Transaction Script” approach. All the configuration – database connectivity properties.

Good applications live longer. That is a fact, and the emphasis here is on live. That means that the applications are under steady development even at the stage of maintenance. In a way, maintenance is the most important part of the application life since it is the longest one. One of the features of this period is that the development during it is trickier and riskier since the application is already in production.

It is interesting to observe the evolution of ORM frameworks in retrospect. They were created with a very noble idea in mind – to hide the complexities of databases from application developers. The developers would map their application objects to database tables, using some kind of XML descriptor, the framework would use it to do the operations with the object graphs, which, in turn, would be translated into the database operations. No SQL whatsoever. The reasoning was simple - 95% of code in the server application can be dealt with this way. We would argue that 95 is a bit high but, anyway, here comes the notorious 80/20 (or, in this case, 95/5) rule. People realize that it is hard to live without SQL. So, here the ORM frameworks lost their purity, they introduced kind of SQL. In the beginning, it was just a dialect of “object query language”. Soon though it became obvious that it is not enough, and they started supporting “native queries” also.

ORM XML descriptors evolved over time also. As any ORM framework documentation would claim, it is not hard to write the XML descriptor mapping fields to columns and linking the objects into the object graphs. And, it is really not ... if you have, let's say, 5 tables with 4 columns each. But once you have 100, 200 or, as some larger applications do, 1000 and more tables with 20 or more columns each, it gets challenging to do even through a visual tool. No wonder that nobody liked it. “It is not a problem”, said ORM developers, and introduced code generating tools. The tool would generate the XML descriptors and the objects out of the database since, as we mentioned before, the databases, especially the big ones, are rarely created without PDM tools and as a rule come to existence before the application based on it. This, by the way, should not be surprising since the databases are part of infrastructure, architecture wise, and the applications are part of processes. Given that you can build any number of processes based on the same infrastructure, the infrastructure comes first. Generating XML descriptors and the objects was probably the only logical and viable thing to do for a medium-to-large scale applications. However, in a way, it defied the main idea of the “object-relational mapping” since if the objects are generated out of a database, their structure is pretty much defined by the structure of the database. Code generation is not an integral element of ORM ideology. It came rather as a patch to it. And it did not really solve the XML descriptors maintainability problem. You can generate your descriptors and the objects, however, once you touched them – they are yours. And it is worth to note that supporting tons of XML is much harder than supporting tons of Java code. Now, imagine that you have another ton of either externally or internally kept SQL or OQL strings that reference that thousands of fields or columns and what happens if you decide to make a change in your database or object structure, for that matter. Reality, as anyone knows, is even worse as you would probably have several groups of developers modifying the database simultaneously.

What about the annotations based mappings which seem to be the recent direction that ORM mainstream has taken? In our opinion, it makes the situation even worse since not solving the maintainability problem it introduces coupling with the framework which XML based approach did not have.

Database centered application is different from a self-sufficient one in at least one but very important aspect – your code resides in 2 different places. Any developer knows how hard is to maintain redundant code synchronous even within the system written in the same language. It is especially difficult in case of 2 different not even languages – tools. To do it efficiently, one has to use some methodology. Let's say we do the change in one place and then propagate it to another using some automation. Being more specific, we modify the database first, to be consistent with what we said before about the infrastructure, and after that we regenerate our application artifacts. With such an approach, the code generation becomes not just an optional add-on but an integral part of the methodology. We admit that not everything can be and should be generated out of the database and we will discuss it later. Here is another thing, if we regenerate the descriptors and the objects every time the database change happens, how does it combine with the manual work that, as we admitted, may take place? We do it by “separating concerns”. We split the descriptors and objects into ones that cannot be modified by hand and those that can be.

It also should be mentioned that going another way – generating database scripts or directly modifying the database from the Domain Model – is much harder. One of the reasons is that databases may contains a lot of parameters that simply unknown in the Domain world, like storages, tablespaces, and so on. In addition, the way from the database to Java, for instance, is better defined that the way back. For example, if you have Clob or varchar2 in Oracle you can always map it to a String, but if you have String you cannot know what Oracle type to use for it because if String contains more than 4000 characters (and this number may be actually version dependent) it has to be mapped to a Clob. Of course, we can always map Strings to Clobs paying a big price in performance. One more thing to keep in mind – databases live by their own rules and determining what data element go to which table involves performance considerations. Disregarding the latter is not an option since database in the vast majority of cases is the performance bottleneck. Ideally the Domain Model design should be always performed with the database design in mind.

One of the sources of poor maintainability – SQL stored as strings inside the code or externally in the files. From the experience, SQL stored in files is good for testing but not for a production application. The alternative would be: wherever it is possible avoid using SQL at all, wherever it is not – generate symbolic constants corresponding to field names and use them in code to produce the SQL instead of strings or external files in cases when SQL is necessary. Try to modify or drop some field/column names and you will appreciate the advise. If you follow it the worst thing that may happen - your code will not compile, the problem that is much easier to fix than plowing through the strings or files in search of those needing the modification. And the argument that it is “cleaner” to store your SQL in files we are not “buying” since you can always separate you queries using pure Java as well.

Let's talk about simplicity of the application and persistence frameworks. Nobody argues that simple code is better than complex one doing the same job. Sometimes simplicity of the application is mistakenly measured in lines of code. That's true that unnecessary code complicates its readability. At the same time, it is also true that more code in many cases can improve it. Actually, that's kind of obvious. Let's ask ourselves another question. Do we care about internal complexity of a persistence framework we are using? The answer is not that obvious. As long as it does the job why should we? Surprisingly, it can affect us. Sure, the readers are very good programmers, never make mistakes but occasionally, out of curiosity, they can still launch the debugger. Would you be surprised if, say, after loading the object from the database you would find all its fields empty? I know – I would be. And pretty annoyed too. That's exactly what can happen when you use some of the “non-intrusive” ORM frameworks since occasionally they modify the byte code. Now, imagine the situation when you are fixing a critical production bug, launch the debugger and ... . Sometimes, complexity of the framework can expose itself in some other ways - long startup times, for instance. While evaluating the framework it usually does not seem that important. Yes, it takes 2 minutes to start it, so what? It does it only once - on the application launch. And, we would add, on the launches of all your functional tests. Now again, imagine yourself fixing a critical production bug ... . By the way, occasionally, you will find that you have to restart your application server and the startup time is important. Or you write an application that is launched as a cronjob with the time interval compared with the startup time itself.

We should say more about simplicity in its relation to the persistence frameworks. How to keep it simple? One of the ways – is not to use hard to understand features, try to avoid code based on the framework specific behaviour. Here is the example. Some people would say that great advantage of the ORM frameworks is that if you ask it for the same object twice during the transaction you would get the same instance of it. And, some may add, it is called transactability (we realize that this is not a dictionary term – at least not yet – but it is commonly used and means “transaction like behaviour”). It's definitely not transactability but rather an attempt to simulate a “serializable” transaction isolation level. The question is though – would you expect this type of behaviour from the ORM tool? Not necessarily, if you ask the database for the object twice in the same transaction it will just retrieve it twice. Now, some may misuse this knowledge and write code like:

openTransaction();

int id = 1;

f1(id);

Person p = f2(id);

persistency.save(p);

closeTransaction();

private Person f1(int id) {

Person p = persistency.get(id);

p.setName(“John”);

return p;

}

private Person f2(int id) {

Person p = persistency.get(id);

p.setGender(“male”);

return p;

}

If our goal was to update a Person with a new name and gender then the first impression - it's done incorrectly, that only the gender field will be updated. Does the persistence go to the database twice? “No”, the expert would say, “We get the same instance of the Person every time as long as we are in the same transaction”, and will be very satisfied with himself. In our mind though, much better would be code like this:

openTransaction();

int id = 1;

Person p = persistency.get(id);

f1(p);

f2(p);

persistency.save(p);

closeTransaction();

private void f1(Person p) {

p.setName(“John”);

}

private void f2(Person p) {

p.setGender(“male”);

}

It's more intuitive and hence more maintainable. And, by the way, more efficient too.

When the EJB technology first came it promised to solve all the problems. And it solved ... some of them. But ultimately it failed. Proof of it - radically different successive EJB specifications. Why did it fail? The problem was in “do not care” attitude it started with. For example, “We do not care that it takes forever to run an EJB application – machine power is cheap and getting cheaper by a minute”. Or, “After this technology comes, companies will not have to care about the application developers anymore - with the architecture in place a monkey can to the job”. It was the “magic bullet” approach. As we know, it did not work, and developers are still around, and the good ones are still in demand. So, was the architecture wrong? Then why a great team of EJB architects did not find the right one? I hate talking banalities, but probably because there is no such thing that “kills'em all”. What to do then? Okay, maybe the right way is to create a set of technologies that intelligent architects and developers would combine and use to fit their needs. These technologies should be specialized and possibly simple. Actually, this is the tendency that takes place in the open source community.

Thus, the concept “simplicity is a virtue” applies to frameworks also. Not always this philosophy wins, though. Take, for example, object caching. Some ORM frameworks make cache (so called secondary one) internal to the framework. Should it be? There are several reasons why it should not. First of all, being internal it usually cannot sufficiently cover all possible caching scenarios. For example, in some cases one may need caching by surrogate key, and the natural one, and maybe, some other keys too. After that one may need to synchronize population and invalidation of those caches. Also sometimes on load of the class one may need to cache only specific instances to minimize the cache size. Moreover, there is another, non-obvious, side effect of internal cache use: it makes it look like no matter what silly things you do in your code, the framework will compensate, which is clearly not true. It therefore implicitly endorses sloppy DOs design, inconsistent caching policies, which, in turn, lead to potential erratic system behaviour and maintainability problems. The application should be designed in such a way that it is efficient enough even without caching. Caching should come as an optional add-on, and not as a must-to-have compensation device for the application or the framework (which is often the case) deficiencies. Keeping in mind that the ORM framework will likely be using external cache implementation anyway and that we target intelligent developers, it would be better to give them 2 frameworks and allow to combine it by themselves. Occasionally, one would need to make ORM and caching work together. Example is a distributed cache when you have to invalidate the values on commit (more precisely – after commit). For that purpose, the persistence framework should provide proper means of communication - transaction events listeners, for instance, letting the developers to hook up the 2 frameworks when necessary. Even though external caching will likely lead to more lines of code, it will also likely make the application better controlled and more maintainable.

ODAL is called Objective for a reason - it is based on specialized objects which stem from the PersistentObject class. As we saw in HelloOdal example, PersistentObject can be used directly. However, the normal way would be to create strictly typed Java Beans that inherit from the PersistentObject class. One of the main ODAL goals was to design an easy and flexible way to create and modify those objects which are deemed a foundation of an application success. And crucial idea here is in separating routine work from an intelligent one, relatively static components from frequently modified ones, and dividing the process into stages that allow developers input. It is like a “separation of concerns” pattern but applied in both spacial and time dimensions.

To achieve this goal the are separated into two essentially different types: basic and the composite (complex/compound) ones. The basic are those mapped one-to-one to database tables. The composite ones are compositions of the basic persistent objects.

This simple separation allows for gradual evolution of your application complexity and provides for effective use of code generation technique.

Generation of basic POs is done it in two steps. The first step is to generate persistent descriptors for the basic persistent objects. These descriptors always go in pairs – one “internal” and one “external”. The internal descriptor contains database metadata and it is never modified by hand. The external one contains metadata that may be modified by hand if necessary – it contains column types, foreign keys, value generators metadata, column aliases or future Java object field names. Some entries in both descriptors may overlap in which case the external descriptor ones take precedence. Modified entries of the external descriptor do not get overwritten in subsequent re-generations except for the cases when the entire column gets deleted from the database. The second step is to generate basic based on the descriptors we just described.

After the basic are generated we can optionally aggregate them into groups of composite persistent objects. It can be done either programmatically or through code generation. Generation of composite is also done in two steps. The first one is to write a descriptor that describes how these object relate to each other. This is the most intelligent step. It cannot be truly automated and should be done manually. The second step is a trivial one in which we generate our composite based on the descriptor.

As you can see, the process of persistent object generation involves several stages and creates several artifacts. After each step developers can interfere with their input. That gives them enough control over the process. Also, this mechanism allows to automatically propagate database changes to the code.

Working with generated is one of the central ideas of ODAL. The POs contain metadata about the tables they are mapped to. That allows using them to build queries without writing SQL. On another hand, do no save or retrieve themselves which provides for better reusability.

If you want to modify the generated to add your own methods you should either inherit from the generated POs or generate DOs and PO/DO mapper which will be discussed later.

ODAL creates an abstraction layer between an application and a persistent storage. A high level view of the architecture looks like that

The application uses ODAL and its generated artifacts to communicate with persistent storage.

Persistency is an object that performs all the operations regarding storing and retrieving persistent objects.

TransactionManager is an object closely related to Persistency that manages database and non-database transactions and sessions.

DOs belong to the Application layer but, as we already mentioned, they can be generated and mapped to the corresponding POs.

ODAL is currently using 2 formats for configuration files. One is usual Java properties file format. For more complicated configurations and the descriptors we use SDL (Simple Data Format) format.

SDL format was proposed by Andrew Suprun and presents a convenient alternative to XML. It supports the following data types: maps, lists, strings, numbers, booleans, timestamps and nulls. White spaces are used as token separators unless they are inside strings.

Map syntax: {<key> = <value>}

List syntax: [<value1> <value2> ... <valueN>]

String syntax: “<value>” or <value> if the value contains alphanumeric characters

Number syntax: <value> where value contains digits only

Boolean syntax: TRUE|FALSE

Timestamps syntax: @<yyyy-MM-dd'T'HH:mm:ss>|@<@yyyy-MM-dd>

Null value syntax: NULL

Single line comment syntax: #

SDL format contains much less tag “noise” than XML what makes it more readable. SDL works nicely for configurations and simple resource files where XML looks like an overkill. ODAL has an implementations for SDL reader and SDL writer. To build an SDL structure all you have to do is build a Map/List tree. SDL writer knows how to save it to SDL format. SDL reader, on another hand, reads SDL code into a Map/List tree.

Below is a sample of SDL code:

{

#

# Map:

#

generic={

po_config_path=somfile.sdl

cpx_desc_path=complex_test.sdl

intern_path=gen/src/java/sdl/persistency/oracle/internal_test.sdl

extern_path=gen/src/java/sdl/persistency/oracle/external_test.sdl

generate_interfaces = TRUE

}

#

# String:

#

filter_pattern="TEST_M|TEST_S"

#

# List:

#

values = [“value-1“ “value-2”]

}

Once you downloaded the binary distribution – unpack it. The root directory of the unpacked file structure is your $ODAL_HOME. You can choose to set it in your environment or not. We will refer to it for convenience down the road. Copy your database driver to $ODAL_HOME/lib directory. Note that $ODAL_HOME/lib directory contains a set of Java archives out of which only one – odal.jar – is mandatory at runtime. If you use JTA transactions you have to include also javax_jta-1_1.zip, and if you use commons logging – include commons-logging.jar. Of course, you have to include your database driver to the classpath as well.

Create your $CONFIG directory where you will keep you configuration files – it will likely be under your application directory tree. Copy persistent-descriptor-config.properties file from $ODAL_HOME/config/ref directory to the $CONFIG directory. $ODAL_HOME/config/ref directory contains templates for all the configuration files you may need. Modify the copied file appropriately. The most importantly, uncomment and set the database configurations.

Run

$ODAL_HOME/bin/desc-po.sh $CONFIG/persistent-descriptor-config.properties

command from console (this is UNIX example, there are corresponding commands for Windows if for some inexplicable reason you choose to run one). It will generate 2 descriptors, internal and external, necessary to generate basic persistent objects.

At this point you may choose to modify the external descriptor. The content of the file may look like

{

tables = {

CONTACT = {

tableAlias = CONTACT

tableName = CONTACT

columns = {

CONTACT_ID = {

columnName = CONTACT_ID

columnAlias = CONTACT_ID

dataType = LONG

optimisticLock = FALSE

exclude = FALSE

transformed = FALSE

# keyGenerator = {

# class = com.completex.objective.components.persistency.key.impl.SimpleSequenceKeyGeneratorImpl

# staticAttributes = {

# name = CONTACT_SEQ

# }

# }

CUSTOMER_ID = {

columnName = CUSTOMER_ID

columnAlias = CUSTOMER_ID

dataType = LONG

optimisticLock = FALSE

exclude = FALSE

transformed = FALSE

}

#

# Some columns hes been removed from this sample

# ..............................................

#

}

exclude = FALSE

transformed = FALSE

foreignKeys = {}

naturalKey = {

columnNames = []

naturalKeyFactory = {

# simpleFactoryClassName =

}

}

}

CUSTOMER = {

tableAlias = CUSTOMER

tableName = CUSTOMER

columns = {

........

}

}

}

}

As you can see the external descriptor contains set of table entries. Each entry has a table name as a key. Let us review CONTACT entry in details.

CONTACT – key of the table entry, coincides with the table name.

tableAlias – CONTACT, table alias. By default coincides with the table name. It is used as class or interface name core (it will be transformed according to Java convention with optional prefix and suffix being provided in the configuration file). Can be modified.

tableName – CONTACT, table name, should not be modified and given here for reference purpose only.

columns – columns entries key.

CONTACT_ID – 1st column key, coincides with the 1st column name.

columnName – CONTACT_ID, column name, should not be modified and given here for reference purpose only.

columnAlias – CONTACT_ID, column alias. By default coincides with the column name. It is used as generated Bean field name seed (it is formatted to Java standard). Can be modified. Enclosed in parentheses “(...)” it stays unformatted and gets interpreted as an exact field name (it should be also enclosed in double quotes since otherwise SDL reader does not interpret it correctly and will throw an exception).

dataType – LONG, ODAL column type (see ColumnType in API documentation). Data types are discussed in details later. Can be modified

optimisticLock – FALSE, boolean value indicating if this column is part of optimistic lock key. Can be modified

exclude – FALSE, boolean value indicating if this column should be excluded from the generated Bean fields. It is convenient when several versions of your application share the same development database. If you regenerate the descriptor from the development database that has additional columns you may exclude them without breaking the older production version that does not have them. Can be modified.

transformed – FALSE, systemic flag. Should not be modified.

keyGenerator – key generator entry. Comes as commented out. Uncomment, modify or add it as required (see detailed description below).

class – com.completex.objective.components.persistency.key.impl.SimpleSequenceKeyGeneratorImpl, generator class name (see detailed description below).

staticAttributes – arguments that will be set when initializing generator. See API documentation for attributes that can be set for a specific generator.

name – CONTACT_SEQ, sequence name

CUSTOMER_ID – 2nd column key

......

exclude – FALSE, boolean indicating if the table should be excluded from object generation. Can be modified.

transformed – FALSE, systemic flag. Should not be modified.

foreignKeys – foreign keys entry. If not specified, the one from the internal descriptor file is used. Can be modified. The easiest way to modify it is to start from one copied from the internal descriptor file.

naturalKey – entry describing key that is used in PersistentObject.toKey() method. If not specified or empty the default one is used. It includes all the primary key columns to the key. You can always override PersistentObject.toKey() method and provide your own key specification.

columnNames – [], list of column names used to form the key

naturalKeyFactory – key factory.

simpleFactoryClassName – simple factory class name. Key factory implementation provided by ODAL is "com.completex.objective.components.persistency.key.impl.SimpleNaturalKeyFactoryImpl". For compound consisting of several basic ones the resulting key is formed as a superposition of its entries ones.

What should you modify in an external descriptor? You should uncomment and modify name or class of key generators if you need them. Foreign key entries normally reside in the internal descriptor. However, if there is danger of dropping them in the database you should consider copying the from the internal into the external one. Occasionally, you will find necessary to change the data type of the column. For example, some JDBC drivers will not tell you if this column type can be interpreted as a BLOB putting more generic BINARY type instead. If you know that this database type can be correctly converted to BLOB by the driver you may decide to modify the generated type.

Once modified, the external descriptor column entries are not overwritten in subsequent regenerations. However, if the table or column is deleted from the database it will also be deleted from the external descriptor.

When generating objects, table and column aliases rather than names are used to derive class and field names of the generated classes. If you are not satisfied with default name conversion you can specify alias values enclosed in parentheses “(...)” and they will be interpreted as exact class or field names, respectively.

The tables keys in the external descriptor must match those in the internal one.

Since you cannot have duplicate table entries in the descriptors, in case you need another persistent object generated from the same table, you have to generate an alternative descriptor pair (or copy them from an existing one).

The data types mentioned in descriptor are internally supported ODAL data type. For the complete list of them please refer chapter Understanding ODAL Data Types. You can also create you custom types as it will be shown in the Advanced Features Guide.

You can also define transformer classes that can alias tables or columns, or redefine column type. To do that you have to create a plugin configuration file in SDL format (see persistent-descriptor-plugins-config.sdl template). Below is an example of such a configuration:

{

transform = {

transformers = [

{

class = "com.tools.BooleanModelTransformer" # Required, must be of ModelTransformer type

# config = {} # Optional

}

# NameModelTransformer

{

class = "com.tools.NameModelTransformer" # Required, must be of ModelTransformer type

# config = {} # Optional

}

]

}

}

This configuration file defines two transformers. Following are the sample transformers sources,

public class BooleanModelTransformer extends AbstractModelTransformer implements ModelTransformer {

protected void transformColumn(MetaTable table, MetaColumn column) {

if (column.getColumnName().endsWith("_FLAG")

&& ColumnType.isString(column.getType())

&& column.getColumnSize() == 1) {

if (column.isRequired()) {

column.setType(ColumnType.BOOLEAN_PRIMITIVE);

} else {

column.setType(ColumnType.BOOLEAN);

}

}

}

}

public class NameModelTransformer extends AbstractModelTransformer implements ModelTransformer {

protected void transformColumn(MetaTable table, MetaColumn column) {

if (column.getColumnName().equalsIgnoreCase("CLASS")) {

column.setColumnAlias("CLAZZ");

}

}

}

As you can figure out, the first one changes type of the column to boolean when the column name ends with “_FLAG”, and the second one aliases the columns named “CLASS” to “CLAZZ” to avoid Java object fields called “class” when generating Persistent Objects.

The last thing to do with regards to transformers is to point plugins_config_path property of persistent-descriptor-config.properties to the path of persistent-descriptor-plugins-config.sdl file.

Copy persistent-object-config.sdl file from $ODAL_HOME/config/ref directory to the $CONFIG directory. Modify the copied file appropriately.

Run

$ODAL_HOME/bin/po.sh $CONFIG/persistent-object-config.sdl

command from console. Review the generated classes.

If you need to work with object trees that are mapped to related tables and the tree elements should be retrieved and modified together, you may decide to create composite which are aggregations of the basic ones. Such aggregations represent master-slave relationships of two types – complex and compound. The complex one provides for weaker parts dependencies than the compound one. For example, if you want to implement “lazy loading” or simply get dependency type other than one-to-one then you have to do it though complex object feature. On another hand, if you want your composite to behave more like a single basic persistent object then you have to implement the compound object feature. Composite object, in fact, can have both complex and compound features as it will be shown below. You can create your composite objects descriptor from scratch but the best way is to start from the $ODAL_HOME/config/ref/composite-po-descriptor.sdl template. It contains rather detailed descriptions of the attributes you have to set are aggregations of the basic ones.

Copy composite-po-config.sdl file from $ODAL_HOME/config/ref directory to the $CONFIG directory. Modify the copied file appropriately.

Run

$ODAL_HOME/bin/po-cmp.sh $CONFIG/composite-po-config.sdl

command from console. Review the generated classes.

This step in necessary if you decide that you cannot or simply do not want to inherit from the generated POs.

Copy bean-config.sdl file from $ODAL_HOME/config/ref directory to the $CONFIG directory. Modify the copied file appropriately.

Run

$ODAL_HOME/bin/bean.sh $CONFIG/bean-object.sdl

command from console. Review the generated classes.

Depending on the model you adopted (including choice to work with basic ones or with composites) your DAO classes may look simpler or more complex. Following is an example of DAO class using composite persistent objects,

public class CustomerDAO {

private Persistency persistency;

public CustomerDAO(Persistency persistency) {

this.persistency = persistency;

}

public void setPersistency(Persistency persistency) {

this.persistency = persistency;

}

public void insertCustomer(final Customer customer) throws CustomerException {

try {

persistency.insert(customer);

} catch (OdalPersistencyException e) {

throw new CustomerException(e);

}

}

public void updateCustomer(final Customer customer) throws CustomerException {

try {

persistency.update(customer);

} catch (OdalPersistencyException e) {

throw new CustomerException(e);

}

}

public void deleteCustomer(final CpxCustomer customer) throws CustomerException {

try {

persistency.delete(customer);

} catch (SQLException e) {

throw new CustomerException(e);

}

}

public Customer loadCustomer(final Long customerId) throws CustomerException {

try {

return (Customer) persistency.load(new CpxCustomer(customerId));

} catch (OdalPersistencyException e) {

throw new CustomerException(e);

}

}

/**

* Load all the customers. Utilizes BasicLifeCycleController.convertAfterRetrieve method

* to pre-load otherwise lazily loadable Contact.

*

* @return list of customers

* @throws CustomerException

*/

public List loadAllCustomers() throws CustomerException {

try {

return (List) persistency.select(new CpxCustomer(), new BasicLifeCycleController() {

public Object convertAfterRetrieve(AbstractPersistentObject persistentObject) {

((CpxCustomer) persistentObject).getContact();

return persistentObject;

}

});

} catch (OdalPersistencyException e) {

throw new CustomerException(e);

}

}

}

The CustomerDAO class is taken from $ODAL_HOME/examples/src/java/com/completex/objective/persistency/examples/ex004/app

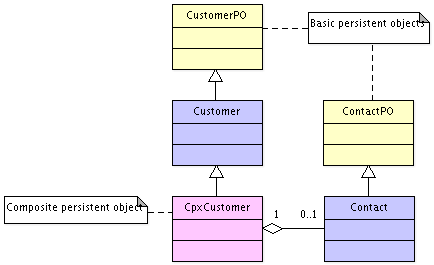

directory. Below is the class diagram of the ones used by the CustomerDAO. User defined descendants are Customer and Contact classes.

As you can see from the code CustomerDAO has reference to Persistency interface.

When working with the Model 2 you would generate 2 sets of classes – POs and DOs. Below is the example of DAO utilizing the 2nd model,

public class CustomerDAO {

private MappingPersistency persistency;

public CustomerDAO(MappingPersistency persistency) {

this.persistency = persistency;

}

public void setPersistency(MappingPersistency persistency) {

this.persistency = persistency;

}

public void insertCustomer(final CpxCustomer customer) throws CustomerException {

try {

persistency.insert(customer);

} catch (OdalPersistencyException e) {

throw new CustomerException(e);

}

}

public void updateCustomer(final CpxCustomer customer) throws CustomerException {

try {

persistency.update(customer);

} catch (OdalPersistencyException e) {

throw new CustomerException(e);

}

}

public void deleteCustomer(final CpxCustomer customer) throws CustomerException {

try {

persistency.delete(customer);

} catch (SQLException e) {

throw new CustomerException(e);

}

}

public CpxCustomer loadCustomer(final Long customerId) throws CustomerException {

try {

return (CpxCustomer) persistency.load(new CpxCustomerPO(customerId));

} catch (OdalPersistencyException e) {

throw new CustomerException(e);

}

}

/**

* Load all the customers. Utilizes BasicLifeCycleController.convertAfterRetrieve method

* to pre-load otherwise lazily loadable Contact.

*

* @return list of customers

* @throws CustomerException

*/

public List loadAllCustomers() throws CustomerException {

try {

return (List) persistency.select(new CpxCustomerPO(), new BasicLifeCycleController() {

public Object convertAfterRetrieve(AbstractPersistentObject persistentObject) {

((CpxCustomerPO) persistentObject).getContact();

return persistentObject;

}

});

} catch (OdalPersistencyException e) {

throw new CustomerException(e);

}

}

}

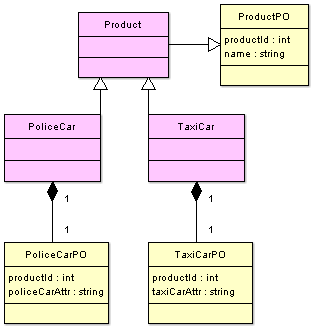

This CustomerDAO class is taken from $ODAL_HOME/examples/src/java/com/completex/objective/persistency/examples/ex004a/app

directory. Below is the class diagram of the ones used by the CustomerDAO.

Note that instead of Persistency interface this CustomerDAO class uses MappingPersistency interface.

The 1st example shows how to get Persistency instance using generic approach,

// Instantiate Persistency (1):

DatabasePolicy policy = DatabasePolicy.DEFAULT_ORACLE_POLICY;

DefaultTransactionManagerFactory tmFactory = new DefaultTransactionManagerFactory();

tmFactory.setDataSource(dataSource);

tmFactory.setDatabasePolicy(policy);

TransactionManager transactionManager = tmFactory.newTransactionManager();

DefaultPersistencyFactory pf = new DefaultPersistencyFactory();

pf.setTransactionManagerFactory(tmFactory);

Persistency persistency = pf.newPersistency();

It allows you to use externally supplied datasource and transaction manager.

The simplest way to get Persistency instance is to use DefaultPersistencyAdapter.

// Instantiate Persistency (1):

Persistency persistency = new DefaultPersistencyAdapter(properties);

It instantiates ODAL datasource internally. The properties that can be set in properties parameter are described by PROP_XXX constants of DefaultPersistencyAdapter class (see the API documentation). It is more appropriate though for small rather than enterprise applications.

You can also use alternative DefaultPersistencyAdapter constructor which accepts any externally supplied DataSource,

// Instantiate Persistency (2):

Persistency persistency =

new DefaultPersistencyAdapter(properties, dataSource,

StdErrorLogAdapter.newLogInstance());

If statement cache size is set to 0 the internal prepared statement caching is disabled as it should be in case the caching is done at the driver level or simply undesirable.

If you use model 2 approach you can instantiate MappingPersistency in the following ways.

// Instantiate Persistency (1):

MappingPersistency persistency = new DefaultMappingPersistencyAdapter(properties);

or

// Instantiate Persistency (2):

MappingPersistency persistency = new DefaultMappingPersistencyAdapter(persistency, mapper);

where persistency is instance of Persistency and mapper is instance of com.completex.objective.components.persistency.mapper.Mapper.

Following is an application example using CustomerDAO to create customers,

public void doIt() throws CustomerException {

Transaction transaction = persistency.getTransactionManager().beginUnchecked();

try {

createAllCustomers();

// Commit w/o returning transaction to the pool

transaction.commit();

} finally {

persistency.getTransactionManager().rollbackSilently( transaction );

}

}

private void createAllCustomers(Transaction transaction) throws CustomerException {

try {

......

CpxCustomer customer = createCustomer(“Macrohard”, “www.macrohard.com”);

Contact contact = createContact();

customer.setContact(contact);

customers.add(customer);

customerDAO.insertCustomer(customer);

......

} catch (SQLException e) {

throw new CustomerException(e);

}

}

private CpxCustomer createCustomer(String orgName, String url) {

CpxCustomer customer = new CpxCustomer();

customer.setOrgName(orgName);

customer.setUrl(url);

return customer;

}

private Contact createContact() {

Contact contact = new Contact();

contact.setFirstName("John");

contact.setLastName("Doe");

contact.setPhone("1-800-111-1111");

contact.setShipAddress("475 LENFANT PLZ 10022 WASHINGTON DC 20260-00");

return contact;

}

Let's take a closer look at how to correctly work with transactions. Note that there are commit and rollback methods on both Transaction and TransactionManager. The difference is that the first one does not release the transaction (does not return it to the pool) and the second one does. In such a way we can decouple database transactions (which are marked by Transaction.commit()) and business ones.

Note that TransactionManager has methods begin() and commit(...) that throw SQLException, and also its doubles beginUnchecked() and commitUnchecked(...) that throw RuntimeException which can be used alternatively providing for shorter syntax. Also there is a double for TransactionManager.rollback(...) method – TransactionManager.rollbackSilently(...). It does not throw any exception – only logs it. It is well suited for use in finally clauses since it will not shadow any previous exceptions.

ODAL comes with its own java.sql.DataSource implementation. It can be used independently and even compiled into a separate jar file if desired. It is simple yet powerful. It features configurable timeout on DataSource.getConnection() method (connectionWaitTimeout) which prevents deadlocks even with buggy programs. It also has optional check for bad connection (ping) capability, to remove bad database sessions from the pool, and prepared statements caching.

Instantiation of the data source is very simple:

DefaultDataSourceFactory dataSourceFactory = new DefaultDataSourceFactory(properties);

DataSource dataSource = dataSourceFactory.newDataSource();

Below is a sample properties file:

driver=org.hsqldb.jdbcDriver

url=jdbc:hsqldb:file:hsqltestdb

user=sa

password=

maxConnections=20

stmtCacheSize=100

connectionWaitTimeout=20000

checkForBadConnection=true

For other configurable parameters see the API.

All ODAL classes are either controllers (“doers”) or models (“descriptors”). Controllers know “how”, models know “what”. Models are all about data structures, agnostic of the way this data is stored or retrieved. On another hand, models are not just “any” Beans. The model classes are all descendants of the PersistentObject.

As we already mentioned, in ODAL fall into two major categories. The first one is basic persistent objects. These are the ones mapped one to one to database tables. The second category is composite or composites. They are compositions of the basic persistent object.

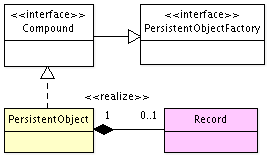

The main feature of the PersistentObject class is that it is not “flat”. If we take a look at its simplified class diagram below

we can notice that PersistentObject class contains Record instance. Record is the class where the most of the things “happen” for PersistentObject. Record contains PersistentObject's current and original state, database meta data, and so on. To a degree PersistentObject is a wrapper for the Record class. PersistentObject by itself does not have any data fields and the only access to data is through the Record class. Generated classes, on another hand, descendants of PersistentObject, have data Bean fields that are duplicates of the Record data. This design lets PersistentObject be flattened and unflattened. Flattened PersistentObject does not contain Record instance and can be used for either caching when application memory footprint is of importance or simply as a read-only data snapshot.

Record class contains meta data which can be accessed through its Record.getTable() method. Record class also contains original and current values of its fields. Record fields fields can be accessed either by name which coincides with that of column name in the basic persistent object descriptors or by index. Let's look at the following code fragment taken from one the generated persistent classes,

private Long customerId;

.....

//

// customerId:

//

public Long getCustomerId() {

return this.customerId;

}

public void setCustomerId(Long customerId) {

if (record2().setObject(ICOL_CUSTOMER_ID, customerId)) {

this.customerId = customerId;

}

}

public boolean izNullCustomerId() {

return record2().getObject(ICOL_CUSTOMER_ID) == null;

}

public void setNullCustomerId() {

if (record2().setObject(ICOL_CUSTOMER_ID, null)) {

this.customerId = null;

}

}

............

public Record record2() {

invalidateOnFlattened();

return record;

}

These are 4 methods that get generated for each persistent object field that is mapped to a database column. You can see that in setters the value gets set to Record and also to a field. Attempt to call any of field accessors or modifiers other than a getter on flattened object will cause OdalRuntimePersistencyException.

The field values can be set directly to the Record. In this case to synchronize Record with its parent PesistentObject, PesistentObject.toBeanFields() should be called. Whenever a setter method is called on PesistentObject field and the original field value is different from the new one the field becomes “dirty”. You can check if particular field is dirty by calling isFieldDirty(...) method of the Record class. You can check if record as a whole is dirty by calling its isDirty() method. If record has never been saved to or retrieved from database then any setter call makes field dirty. Only dirty fields get saved in database. Occasionally, you will find it convenient not to overwrite record's value with nulls. In this case you can set setNotOverwriteWithNulls(...). You also may not want to save records that have only primary key values populated. Use setSkipInsertForKeysOnly(...) method in this case.

PersistentObject class provides several copy methods that allow for copying one PersistentObject into another (see the API documentation).

Generated persistent object classes always have 2 constructors – the default one with no arguments and the one with primary key fields. When inheriting from the generated object remember to always implement the no arguments constructor.

What is the difference between basic persistent object and a composite one? Simple answer is that composite persistent object is the one that returns true from one of these methods: PersistentObject.complex() or PersistentObject.compound(). That means that composite is basic persistent object with enabled complex or compound features.

To better understand what compound object is we have to ask ourselves why would we need one?

Imagine a set of tables with one-to-one relationships that really represent one logical object and on Java level you would want them to behave like one. This is the situation were use of compound object is appropriate.

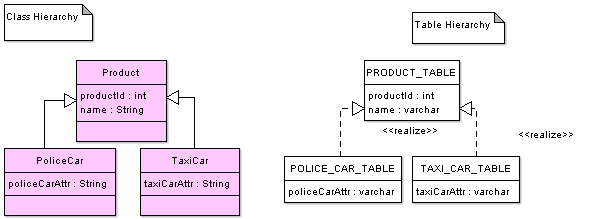

If we go deeper we can reminisce the theory of O-R mapping with regards to object inheritance. Martin Fowler mentions three patterns: Single Table Inheritance, Concrete Table Inheritance and Class Table Inheritance.

Single Table Inheritance uses one table to store all the classes in hierarchy. Concrete Table Inheritance uses one table to store concrete class in hierarchy. Class Table Inheritance uses one table for each class in hierarchy.

Let us consider how to model them in ODAL. Single Table Inheritance is trivial, you generate a basic persistent class and you inherit from it as many times as you want.

Concrete Table Inheritance cannot be reproduced in ODAL since ODAL generates separate class per table. However, it can be simulated by use of interfaces instead of classes.

Class Table Inheritance, the most meaningful pattern out of three, can be fully expressed in ODAL and it is well-suited for use of compound objects. Simplified table and class hierarchies describing Class Table Inheritance are shown below.

Let us take a look at how this pattern is implemented in ODAL.

Compound composite objects are shown in pink, basic that map one-to-one to the database tables are in yellow. As you can see, PoliceCar and TaxiCar classes inherit to Product and contain instances of basic PoliceCarPO and TaxiCarPO, respectively. PoliceCar gets all the attributes that the Product has and additional attributes from the PoliceCarPO class.

There are two ways to add child fields to a compound composite: one is with containment type “has”, another – with containment type “is”. In the first case, the generated object would have one accessor and one modifier per child (to get or set PoliceCarPO, in our case). When the “is” containment type is used, the generated object would have all the child class accessors and modifiers added to a resulting composite.

Child entries of a compound object can only be (at least for now) basic persistent objects.

To generate the class hierarchy we have to create a proper entry in the composite descriptor file. Below is a sample of a composite descriptor with the fragment that produces desired object hierarchy,

#

# Descriptor to generate test complex & compound objects

#

{

.................

objectsReferences = {

#-----------------------------------------------------------------------#

# Compound delegating factory objects:

#-----------------------------------------------------------------------#

CPD_PRODUCT = {

className = "Product"

#interfaceName = "ICpdTestProduct"

base = {

name = PRODUCT # Required: Name of one of objectsReferences from this file

# or alias from external descriptor

# className = "ProductPO" # Optional

}

compound = {

delegateFactory = {

className = com.completex.objective.components.persistency.core.impl.CompoundDelegateFactoryImpl

delegatePersistentObjects = [ "com.Product"

"com.TaxiCar"

"com.PoliceCar" ]

delegateValues = [ "product" "taxiCar" "policeCar" ]

discriminatorColumnName = "ProductPO.COL_NAME"

}

}

}

#-----------------------------------------------------------------------#

# Compound object:

#-----------------------------------------------------------------------#

CPD_TAXI_CAR = {

className = "TaxiCar"

#interfaceName = "ICpdTestCar"

base = {

name = CPD_PRODUCT # Required: Name of one of objectsReferences from this file

# or alias from external descriptor

# className = com.Product # Optional class name

}

compound = {

children = {

taxiCarAttr = {

ref = {

name = TAXI_CAR_PO # Required: Name of

# the alias from external descriptor

className = com.TaxiCarPO # Optional class name

}

cascadeInsert = TRUE

cascadeDelete = TRUE

containmentType = is

}

}

}

}

#-----------------------------------------------------------------------#

# Compound object:

#-----------------------------------------------------------------------#

CPD_POLICE_CAR = {

className = "PoliceCar" # Generated class name

#interfaceName = "ICpdTestCar"

base = {

name = CPD_PRODUCT # Required: Name of one of objectsReferences from this file

# or alias from external descriptor

# className = XXX # Optional

}

compound = {

children = {

policeCarAttr = {

ref = {

name = POLICE_CAR_PO # Required: Name of

# the alias from external descriptor

className = com.PoliceCarPO

}

cascadeInsert = TRUE

cascadeDelete = TRUE

containmentType = is

}

}

}

}

#-----------------------------------------------------------------------#

} # End of objectsReferences

}

Each object reference in the descriptor file corresponds to a class to generate. Let's take a look at CPD_PRODUCT entry.

CPD_PRODUCT

className – Product, short name of generated class (its package is specified in the generator configuration file, composite-po-config.sdl ).

base – describes the parent of the generated class.

name – name of one of objects references from this file or table entry alias from external descriptor. PRODUCT, in this case, is the alias from the external descriptor.

compound – describes compound features of the composite.

delegateFactory. Presence of that entry means that this class is configured to instantiate other classes.

className – full name of the delegate factory class.

delegatePersistentObjects – array of class names to be instantiated when the values in the column specified in discriminatorColumnName entry is set to corresponding values in a delegateValues array.

delegateValues – see delegatePersistentObjects.

discriminatorColumnName – the table column name which values are used as keys indicating to Persistency what classes are to instantiate. It is a string that gets interpreted either literally or as a the generated constant if it has a '.' character inside. In our case, When the values in ProductPO.COL_NAME (the generated constant) column are "product", "taxiCar", "policeCar", the Persistency will instantiate "com.Product", "com.TaxiCar", "com.PoliceCar" classes, respectively.

Let's take a look CPD_TAXI_CAR object reference.

CPD_TAXI_CAR

className

base

name – CPD_PRODUCT is reference to CPD_PRODUCT entry described earlier.

compound

children – sub-references to basic which fields are to be added to the composite.

taxiCarAttr – sub-reference key. Its value is used to derive field, accessor and modifier names in case of “has” containment type.

ref – describes this sub-reference.

name – name of table entry alias from the external descriptor.

className – optional class name. If not provided – derived from the table entry alias (see previous paragraph).

cascadeInsert – TRUE, indicates that when the parent object is inserted the child will be inserted too.

cascadeDelete – TRUE, indicates that when the parent object is deleted the child will be deleted too.

containmentType = “is” indicates, as we stated before, that all the child class accessors and modifiers added to a resulting composite.

Complex object is a composite with parts being relatively independent allowing for lazy loading, for example. In contrast to a compound, complex object can represent one-to-many and many-to-one as well as one-to-one relationships.

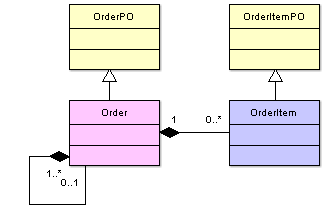

Let us say we have an Order and OrderItem objects as it is shown at the diagram below.

Here OrderPO and OrderItemPO are basic persistent objects. Order is a complex one. It can have several order items and also it has a reference to the parent order which is depicted as a reference to itself.

The corresponding entry in the composite descriptor file is as follows,

CPX_ORDER = {

className = "Order" # Optional - otherwise derived from the key name

# interfaceName = # Optional - otherwise derived from the key name

base = {

name = CUSTOMER_ORDER

className = OrderPO

}

complex = {

children = {

orderItem = {

relationshipType = one_to_many

ref = {

name = ORDER_ITEM

className = com.OrderItem

}

lazy = TRUE

cascadeInsert = TRUE

cascadeUpdate = TRUE

cascadeDelete = TRUE

multipleResultFactory = {

className = com.completex.objective.components.persistency.type.TracingArrayListCollectionFactory

# constructorArgs = [ true true ]

}

}

parentOrder = {

relationshipType = many_to_one

ref = {

name = CUSTOMER_ORDER

className = Order }

lazy = TRUE

cascadeInsert = TRUE

cascadeUpdate = TRUE

cascadeDelete = TRUE

}

}

}

}

Below is an explanation what the sub-entries of the CPX_ORDER mean.

CPX_ORDER

className – Order, short name of generated class (its package is specified in the generator configuration file, composite-po-config.sdl ).

base – describes the parent of the generated class.

name – name of one of objects references from this file or table entry alias from external descriptor. CUSTOMER_ORDER, in this case, is the alias from the external descriptor.

className – OrderPO, optional name of the parent class. If not specified it would have been resolved through the name reference (see previous paragraph).

complex – describes “complex” features of the composite.

children – sub-references to child persistent objects.

orderItem – the 1st sub-reference key. Its value is used to derive field, accessor and modifier names.

relationshipType – one_to_many, relationship to the parent, Order in this case, object. It should correspond to the ones on the database tables level.

ref – describes this sub-reference.

name - ORDER_ITEM, name of table entry alias from the external descriptor.

className – com.OrderItem, name of the basic persistent object or its descendant class.

lazy – TRUE, indicates that order items collection is to be loaded in “lazy” fashion.

cascadeInsert – TRUE, indicates that when the parent object is inserted the child will be inserted too.

cascadeUpdate = TRUE, indicates that when the parent object is updated the child will be updated too.

cascadeDelete = TRUE, indicates that when the parent object is deleted the child will be deleted too.

multipleResultFactory – describes the collection factory to be used when retrieving the order items collection.

className – class name of the collection factory .

parentOrder - the 2nd sub-reference key.

relationshipType – many_to_one, relationship to the order, meaning that one parent order can have several orders.

ref

name – CUSTOMER_ORDER.

className – com.Order.

lazy – TRUE.

cascadeInsert – TRUE.

cascadeUpdate – TRUE.

cascadeDelete – TRUE.

You could notice that many-to-many relationship type was not mentioned when describing composites. It is officially not supported by ODAL. This is done purposely, since many-to-many mappings potentially bring more problems than they resolve. Though, it can be represented in terms of several one-to-many, many-to-one relationships, it is not recommended to do that.

Compound objects cannot have circular dependencies since their child entries always refer to basic persistent objects. Complex objects, on another hand, can have circular dependencies on class level.

When the object first instantiated ODAL pre-compiles its link dependency graph and identifies all the dependencies including the circular ones on the class level. Link dependency graph is used whenever the object is retrieved in non-lazy manner, so the framework knows where to stop the retrieval without going into infinite loop.

Consider an example where we have class A that contains child named “slave” referencing class B – {slave=B} which, in its turn, has a child {master=A}. When dependency graph gets pre-compiled, ODAL collects {A, B, slave} link, {B, A, master} link, then it encounters {A, B, slave} link again and marks it “the end of the chain”. On load of A, sequence A -> B will only be retrieved once.

When the class references itself, like when A has a child {link=A}, the sequence A->A will also be retrieved once.

It should be mentioned that even though ODAL supports circular dependencies, we would strongly advice against using them, except may be for cases of self-dependencies. Circular dependencies, in general, complicate object structure limiting their re-usability and reducing maintainability. With no circular dependencies you can freely use inheritance. In case of circularly dependent objects, you have to make sure that the classes in mutual references are the same (not just some their descendants) since otherwise the executed query chain may be longer that you expected.

ODAL does not keep an internal object cache and hence, if you retrieve object A{id=1} several times, the queries will also be executed several times each time returning a new A instance. As a consequence, bi-directional dependencies on object level are not supported.

Composites can combine compound and complex features. We can imagine, for instance, PoliceCar class, defined before as a compound object, having a tree of extra attributes which could be described in terms of complex features. Every such a combination should be thoroughly thought through since the more complicated the object becomes the less chances you have to reuse it and the less control you have over it.

There two other types of worth mentioning here. One of them is CompoundPersistentObject. CompoundPersistentObject is convenient when you have to retrieve rows of data consisting of records coming from different tables. Internally, it contains an array of persistent objects. You can combine the generated, strictly typed, with generic ones of AdHocPersistentObject type.

Let's say the result of the query exactly matches records of Customer and Order but contains one extra field, rownum, for example. The CompoundPersistentObject that can serve as a container for this data can be instantiated as follows,

AdHocPersistentObject adHocPersistentObject = new AdHocPersistentObject(1);

adHocPersistentObject.setColumnName(0, "rownum");

CompoundPersistentObject singularResultFactory = new CompoundPersistentObject(new PersistentObject[]{new Customer(), new Order(), adHocPersistentObject});

We used here AdHocPersistentObject to retrieve “rownum” column that does not exist in any of the generated objects. It will be discussed later in more details.

implement java.io.Externalizable interface methods. You can choose to use them in which case

implements="java.io.Externalizable"

must be set in the generator configuration file. It is set by default in the configuration template file. When the default serialization mechanism is used, serialized, modified and serialized back “remember” their original as well as modified states which allows for selective field update without synchronization with a database. Flattened cannot be serialized through Externalizable interface methods.

You can serialize flattened using simple Java serialization. In that case you have to set

implements="java.io.Serializable"

in the generator configuration file.

Alternatively, you may choose to implement your own serialization mechanism.

ODAL provides PoOutputStream and PoInputStream (see com.completex.objective.components.persistency.io.obj.impl package) implementations of ObjectOutput and ObjectInput interfaces respectively that allow to specify the serialization mode – preserving original values or not – while using non-flattened persistent objects.

also can be serialized in XML format using XmlPersistentObjectOutputStream and XmlPersistentObjectInputStream implementations of ObjectOutput and ObjectInput interfaces (see the API for more information).

Transaction has to be created before any database operation. Transactions are created through TransactionManager interface. ODAL transaction combines features of both database session and database transaction. That means that when you call Transaction.commit() or Transaction.rollback() methods transaction does not die. You can repeatedly call Transaction.commit() the same way you do it in database session. To finalize transaction you have to call commit or rollback on TransactionManager at which point the transaction gets released and returned back to the pool.

TransactionManager supports flat as well as pseudo-nested transactions. Below is an example that features use of pseudo-nested transactions,

public void createCustomerWithProfile() throws CustomerException {

Transaction transactionOuter = null;

Transaction transactionInner = null;

try {